Is your Internet connectivity REALLY redundant?

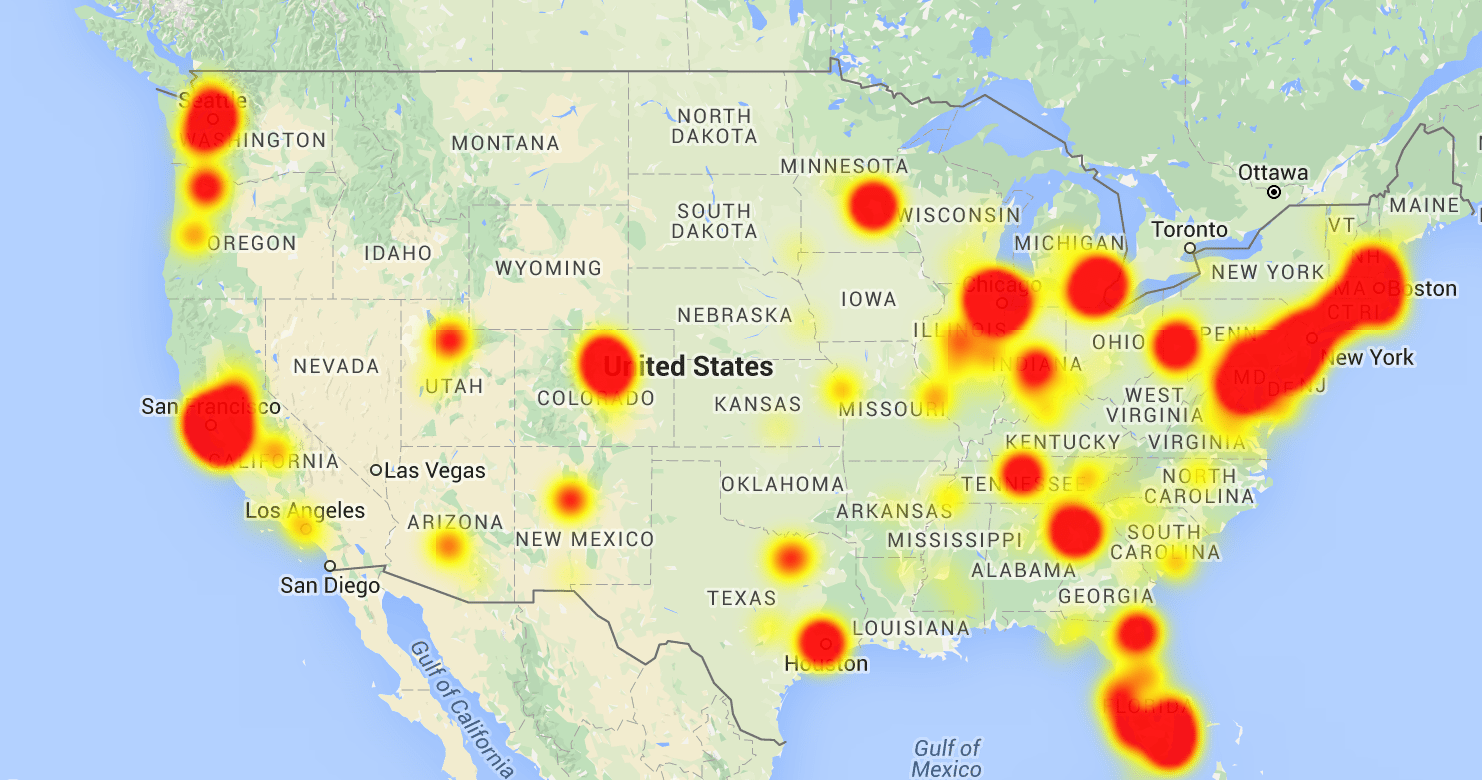

The CenturyLink/Level3 internet outage on August 30th, 2020, got a lot of network engineers thinking about internet reachability and the ways things can go wrong. The way this particular failure played out was unique and gave us all a lot to consider in the way of oddball failure scenarios. Problems started for CenturyLink/Level3 when a BGP Flowspec announcement came from a datacenter in Mississauga, Ontario, Canada. Flowspec, a security mechanism within BGP, is commonly used to filter large volumetric distributed denial of service (DDoS) attacks within a network. The root cause of this particular issue appears to be operator error: a CenturyLink engineer was allowed to put a wildcard entry into a Flowspec command meant to block such an attack. This malformed entry caused many more IP addresses than intended to be filtered, wreaking havoc on the CenturyLink/Level3 backbone within Autonomous System (AS) 3356. Because the rule filtered traffic across the backbone, it tore down BGP sessions, causing instability and reachability issues throughout the network.

One very interesting bit about how things failed was what happened when other networks tried to shut down their BGP sessions to AS 3356. CenturyLink/Level3 didn’t stop propagating those networks’ prefixes/IP address blocks even after the BGP sessions were shut down. As a result, the BGP speakers still connected to AS 3356 believed it was a valid path to reach those prefixes when it no longer was. This traffic was then “blackholed” within the CenturyLink/Level3 backbone because there was no longer an exit point to reach the IP addresses. So not only could you not use the backbone during the disruption, the failure could also have prevented those who proactively disconnected from CenturyLink/Level3 from using the alternate paths they were connected to.

So a question comes to mind for many network engineers examining the post-mortem of this event: How can I make sure my network is not affected if this happens again? Here are a few items to take into consideration:

SD-WAN – Now mainstream and very mature, SD-WAN is a fantastic way to overcome connectivity issues over the Internet. Because probes are sent periodically to measure path performance, the right SD-WAN solution could route around performance problems on a network. An SD-WAN overlay alone can’t resolve every issue but combined with some of the other recommendations here, certainly gives you greater resilience.
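To make the probe-based idea concrete, here is a minimal sketch of how path selection from probe results might work. It is not how any particular SD-WAN product does it: the `PathStats` class, the `pick_path` function, the 2% loss threshold and the link names are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class PathStats:
    """Probe results for one underlay link (hypothetical structure)."""
    name: str
    probes_sent: int
    # Round-trip times, in ms, of the probes that were answered.
    latencies_ms: list = field(default_factory=list)

    @property
    def loss(self) -> float:
        # Fraction of probes that never came back.
        if self.probes_sent == 0:
            return 1.0
        return 1.0 - len(self.latencies_ms) / self.probes_sent

    @property
    def avg_latency_ms(self) -> float:
        # A path with no answered probes is treated as infinitely slow.
        return mean(self.latencies_ms) if self.latencies_ms else float("inf")


def pick_path(paths, max_loss=0.02):
    """Prefer the lowest-latency path whose loss is within max_loss.

    If no path meets the loss threshold, fall back to the least lossy one.
    The threshold is an assumed value, not a recommendation.
    """
    healthy = [p for p in paths if p.loss <= max_loss]
    if healthy:
        return min(healthy, key=lambda p: p.avg_latency_ms)
    return min(paths, key=lambda p: p.loss)


if __name__ == "__main__":
    paths = [
        PathStats("isp_a", probes_sent=10, latencies_ms=[20, 21, 19]),  # fast but lossy
        PathStats("isp_b", probes_sent=10, latencies_ms=[35] * 10),
        PathStats("lte_backup", probes_sent=10, latencies_ms=[60] * 10),
    ]
    print(pick_path(paths).name)
```

The point of the sketch is that the decision is driven by measured behavior on each path rather than by whether the link or the BGP session is merely up, which is exactly the kind of signal that was misleading during the outage.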

Autonomous System Diversity – When designing for internet connectivity resilience, the goal is to make your links as independent from one another as you can. Examine the autonomous system paths of the providers you select to be sure they do not depend on one another for transit. A great tool for this is CAIDA’s ASRank, which shows the relationships ASNs have with one another. Look up the ASNs of the providers you are considering and check how they relate. In particular, you likely want to avoid the two ASes having a “customer” or “provider” relationship. Ideally, you’ll want them to be “peers”. Unfortunately, that doesn’t guarantee you won’t be affected by something like what happened on August 30th, when AS 3356 kept advertising and blackholing prefixes, but it gets you about as close as you can to ASNs that don’t depend on one another, and it gives you a better chance of surviving an outage.
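If you have a handful of candidate providers, the relationship check can be scripted once you have the data in hand. The sketch below assumes you have written the relationships down as pipe-separated lines (`provider|customer|-1` for a transit relationship, `a|b|0` for peers). That input format, the function names and the sample ASNs (taken from the range reserved for documentation) are assumptions for illustration; the script does not query ASRank itself.

```python
def parse_relationships(lines):
    """Build a lookup of AS-to-AS relationships from pipe-separated lines.

    Assumed line format: "<as1>|<as2>|-1" means as1 is a provider of as2,
    "<as1>|<as2>|0" means the two are peers. Blank lines and lines
    starting with '#' are ignored.
    """
    rels = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        left, right, kind = line.split("|")[:3]
        a, b = int(left), int(right)
        if kind == "-1":
            rels[(a, b)] = "provider"  # a sells transit to b
            rels[(b, a)] = "customer"  # b buys transit from a
        elif kind == "0":
            rels[(a, b)] = rels[(b, a)] = "peer"
    return rels


def relationship(a, b, rels):
    """Return a's role relative to b: provider, customer, peer or unknown."""
    return rels.get((a, b), "unknown")


def transit_dependent(a, b, rels):
    """True if one of the two ASes buys transit from the other."""
    return relationship(a, b, rels) in ("provider", "customer")


if __name__ == "__main__":
    # Made-up sample data using documentation-range ASNs.
    sample = [
        "# provider|customer|-1, peer|peer|0",
        "64496|64497|-1",
        "64498|64499|0",
    ]
    rels = parse_relationships(sample)
    for pair in [(64496, 64497), (64498, 64499), (64496, 64499)]:
        print(pair, relationship(*pair, rels), transit_dependent(*pair, rels))
```

A pair that comes back as "unknown" simply means the data you entered says nothing about it, so it is worth looking that pair up by hand before treating the two providers as independent.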

Three Connections or More – Many with redundant Internet connections assume two connections are enough. I would contend that having a third connection, even if it’s a backup-only connection via 4G/5G over a wireless carrier, can save your bacon if the other two carriers are affected by the same outage.

IXPs, CXPs and Cloud Direct Connections – You may want to consider peering into one of the following:

- Internet Exchange Point (IXP) – You’ll find IXPs all over the world as a means to inexpensively peer networks directly in a multilateral or bilateral peering arrangement. With multilateral peering, you connect to a route server with one BGP peering session, then exchange routes with everyone else connected to the route server. Bilateral peering is a direct BGP peering relationship with another entity on the exchange. These arrangements let a network connect directly to other regional networks without the need for transit, saving money, reducing latency and improving overall performance. Quick plug: I work with the Ohio IX so if you’re in Ohio, I highly recommend checking them out.

- Cloud Exchange Points (CXP) or Direct Cloud Connections – As the public cloud becomes more important to IT infrastructures, finding a way to stay directly connected to these resources becomes critical. Like connecting to an IXP, connecting to a CXP or Direct Cloud Connection to reach key cloud providers is another opportunity to improve not just redundancy but performance as well.

In closing, it’s difficult to plan for every type of network failure that can occur. This most recent CenturyLink/Level3 outage was one for the books, that’s for sure. All we can do as network engineers is learn from it and strive to build better networks from the lessons we take away.

Thanks for reading! If you’re an Ohio network engineer, be sure to check out a couple of organizations I’m involved with: (OH)NUG and Ohio IX. I might be a little biased but feel they are great resources right in our backyard!